What Is Mediasoup?

Mediasoup is an open-source SFU (Selective Forwarding Unit): a C++ media worker that you control from Node.js (a Rust crate is also available). It’s production-ready and used by companies building real-time communication platforms.

Unlike a full conferencing product such as Jitsi Meet, mediasoup is a lower-level building block. You use it as the media engine, then build the rest (signaling, UI, authentication) yourself.

```text
Your Application
       ↓
mediasoup (media routing)
       ↓
WebRTC ↔ Clients
```

This gives you complete control and flexibility.

Part 1: Understanding SFU Through Mediasoup

What Mediasoup Does

Mediasoup receives audio and video streams from endpoints and selectively forwards them to other endpoints.

```text
Client A sends stream → mediasoup → forwards to B, C, D
Client B sends stream → mediasoup → forwards to A, C, D
Client C sends stream → mediasoup → forwards to A, B, D
Client D sends stream → mediasoup → forwards to A, B, C
```

Each client:

- Sends one stream (audio + video)

- Receives multiple streams (from all other clients)

- Can choose which streams to receive (selective)

- Can choose spatial and temporal layers (quality/bandwidth control)

This is the SFU model: each client receives several separate streams, decodes them itself, and lays them out however it wants.
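The forwarding cost of this model is easy to put in numbers. The sketch below is plain TypeScript with no mediasoup involved. sfuLoad is a hypothetical helper that counts streams for n participants. It assumes, as above, that each client publishes one audio+video stream and subscribes to all the others.

```typescript
interface SfuLoad {
  serverIngress: number;     // streams arriving at the SFU (one per sender)
  serverEgress: number;      // streams the SFU forwards in total
  perClientDownlink: number; // streams each client receives
}

// Every participant sends once and receives from the other n - 1 participants.
function sfuLoad(n: number): SfuLoad {
  if (!Number.isInteger(n) || n < 1) {
    throw new RangeError("n must be a positive integer");
  }
  return {
    serverIngress: n,
    serverEgress: n * (n - 1),
    perClientDownlink: n - 1,
  };
}

console.log(sfuLoad(4)); // { serverIngress: 4, serverEgress: 12, perClientDownlink: 3 }
console.log(sfuLoad(10).serverEgress); // 90
```

Outgoing traffic at the SFU grows as n * (n - 1), which is why the selective part matters. Every stream a client skips, and every lower layer it accepts, directly shrinks that number.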

Why Mediasoup Over MCU?

MCU (Multipoint Control Unit) would:

- Decode and mix all streams into one composite stream

- Add latency (decoding, mixing, and re-encoding take time)

- Require powerful servers

Mediasoup only forwards. It never decodes or re-encodes media, so server-side processing and added latency stay low.

```text
MCU: Client A + B + C → Mix → Compose → Single output → All clients

SFU: Client A → Forward → All clients (separately)
     Client B → Forward → All clients (separately)
     Client C → Forward → All clients (separately)
```

Part 2: The Architecture — Workers and Routers

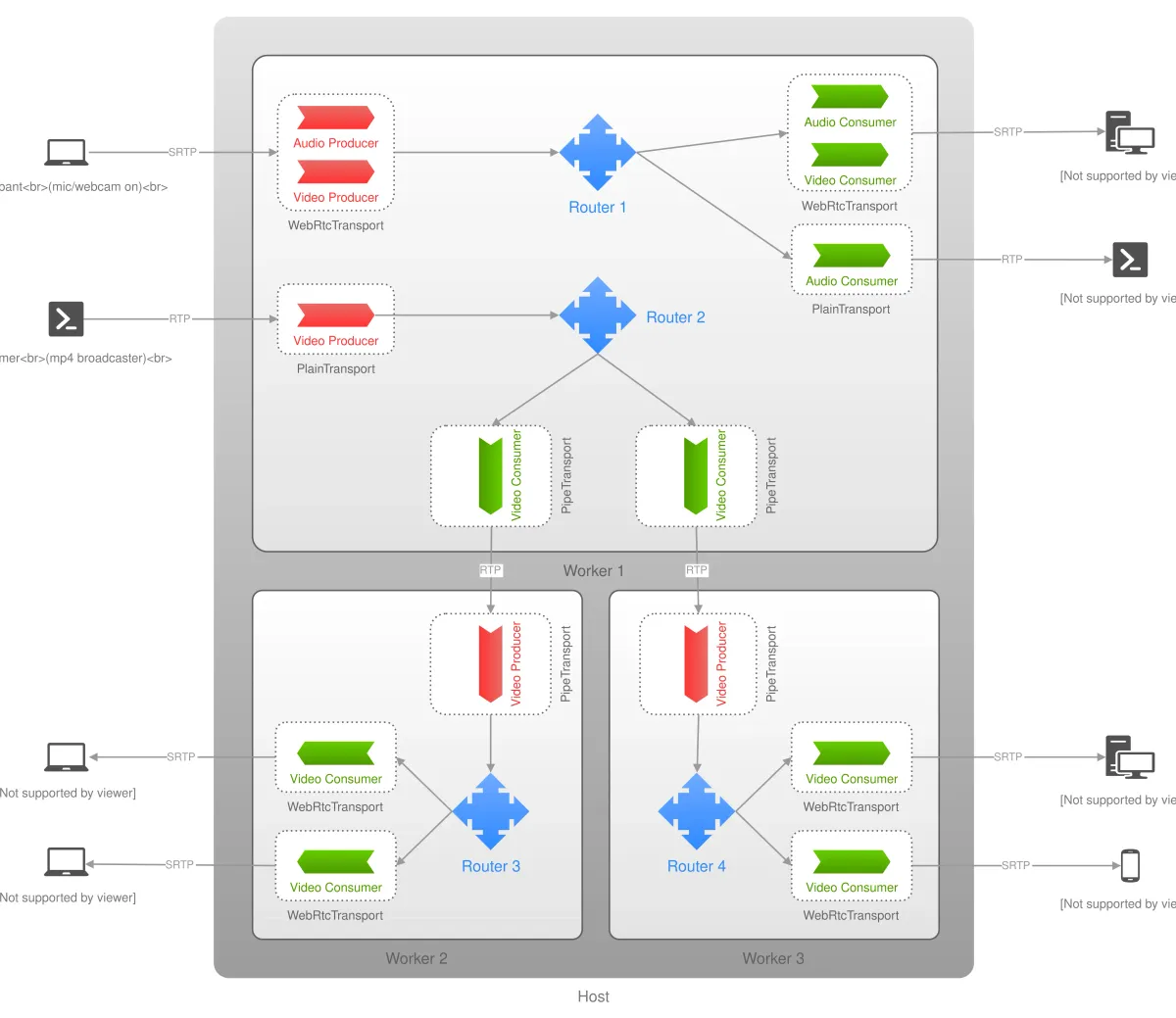

Worker Model

Mediasoup uses a worker pool model:

```text
┌───────────────────────────────────────┐
│ Node.js application (single-threaded) │
└───────────────────┬───────────────────┘
                    │
      ┌─────────────┼─────────────┬─────────────┐
      ▼             ▼             ▼             ▼
  [Worker 0]    [Worker 1]    [Worker 2]    [Worker 3]
 (CPU core 0)  (CPU core 1)  (CPU core 2)  (CPU core 3)
      │             │             │             │
      └─────────────┴─────────────┴─────────────┘
              (each worker hosts routers)
```

Key concept: each worker is a separate, single-threaded C++ process, so it uses at most one CPU core.

If your server has 8 cores, run 8 workers (in Node.js, os.cpus().length gives the number of logical CPUs). Each one routes media independently.
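Worker count is not the only sizing knob. In the createWorkers() example that follows, each worker gets the port range 40000 to 40100. Unless a mediasoup WebRtcServer is used, every WebRtcTransport binds its own port from that range. The back-of-the-envelope check below shows what that means for capacity. Its helper names and the two-transports-per-participant figure are assumptions of this sketch.

```typescript
// Assumption: one send + one recv WebRtcTransport per participant,
// each binding one port from the worker's rtcMinPort..rtcMaxPort range.
const TRANSPORTS_PER_PARTICIPANT = 2;

function portCount(minPort: number, maxPort: number): number {
  if (maxPort < minPort) throw new RangeError("maxPort must be >= minPort");
  return maxPort - minPort + 1;
}

// Rough ceiling on concurrent participants a single port range can serve.
function maxParticipants(minPort: number, maxPort: number): number {
  return Math.floor(portCount(minPort, maxPort) / TRANSPORTS_PER_PARTICIPANT);
}

interface PortRange {
  rtcMinPort: number;
  rtcMaxPort: number;
}

// Optionally give each worker a disjoint slice of a larger range,
// so per-worker capacity is explicit.
function splitPortRange(minPort: number, maxPort: number, workers: number): PortRange[] {
  const total = portCount(minPort, maxPort);
  if (!Number.isInteger(workers) || workers < 1 || workers > total) {
    throw new RangeError("workers must be between 1 and the number of ports");
  }
  const base = Math.floor(total / workers);
  const extra = total % workers; // the first `extra` workers get one more port
  const slices: PortRange[] = [];
  let start = minPort;
  for (let i = 0; i < workers; i++) {
    const size = base + (i < extra ? 1 : 0);
    slices.push({ rtcMinPort: start, rtcMaxPort: start + size - 1 });
    start += size;
  }
  return slices;
}

console.log(maxParticipants(40000, 40100)); // 50
const numWorkers = 8; // e.g. os.cpus().length on an 8-core host
console.log(splitPortRange(40000, 49999, numWorkers)[0]); // { rtcMinPort: 40000, rtcMaxPort: 41249 }
```

By this estimate, a shared 101-port range serves only about 50 participants in total across all workers. Real traffic needs a much wider range, or a WebRtcServer.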

```typescript
import * as mediasoup from 'mediasoup';

const workers: mediasoup.types.Worker[] = [];

async function createWorkers(numWorkers: number) {
  for (let i = 0; i < numWorkers; i++) {
    const worker = await mediasoup.createWorker({
      logLevel: 'warn',
      logTags: ['rtp', 'rtcp'],
      rtcMinPort: 40000,
      rtcMaxPort: 40100,
    });

    worker.on('died', (error) => {
      console.error(`Worker ${i} died, restart it`, error);
      // Restart logic here: every router and transport on this worker is gone
    });

    workers.push(worker);
  }
  return workers;
}
```

Router: The Media Hub

When a meeting starts, you create a router on a worker:

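```typescript
// selectedWorker: one of the workers created above
const router = await selectedWorker.createRouter({
  mediaCodecs: [
    {
      kind: 'audio',
      mimeType: 'audio/opus',
      clockRate: 48000,
      channels: 2, // mediasoup's supported Opus entry uses 2 channels
    },
    {
      kind: 'video',
      mimeType: 'video/VP8',
      clockRate: 90000,
    },
  ],
});
```

The router is responsible for: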

- Receiving streams from producers

- Forwarding to consumers

- Managing transports

- Handling media codec negotiation

A common design is one router per meeting, with all participants in that meeting on the same router.
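With one router per meeting, two participants who join the same meeting at almost the same moment can race to create it. One way to avoid duplicate routers is to cache the pending promise per meeting ID. The sketch below uses small structural types in place of mediasoup's Worker and Router, modeling only createRouter(), id, and close(). The class name MeetingRouters is hypothetical.

```typescript
// Structural stand-ins for the parts of mediasoup's Worker/Router used here.
interface RouterLike {
  id: string;
  close(): void;
}
interface WorkerLike {
  createRouter(options: { mediaCodecs: unknown[] }): Promise<RouterLike>;
}

class MeetingRouters {
  // Cache the promise, not the router, so concurrent joins share one createRouter() call.
  private readonly routers = new Map<string, Promise<RouterLike>>();
  private readonly pickWorker: () => WorkerLike;
  private readonly mediaCodecs: unknown[];

  constructor(pickWorker: () => WorkerLike, mediaCodecs: unknown[]) {
    this.pickWorker = pickWorker;
    this.mediaCodecs = mediaCodecs;
  }

  getOrCreate(meetingId: string): Promise<RouterLike> {
    const existing = this.routers.get(meetingId);
    if (existing) return existing;

    const created = this.pickWorker().createRouter({ mediaCodecs: this.mediaCodecs });
    this.routers.set(meetingId, created);
    // If creation fails, forget the entry so the next join can retry.
    created.catch(() => {
      if (this.routers.get(meetingId) === created) this.routers.delete(meetingId);
    });
    return created;
  }

  // Call when the last participant leaves the meeting.
  async close(meetingId: string): Promise<void> {
    const pending = this.routers.get(meetingId);
    if (!pending) return;
    this.routers.delete(meetingId);
    (await pending).close();
  }
}
```

In a real server, pickWorker would be whatever worker-selection policy you use, and mediaCodecs would be the same array passed to createRouter() earlier.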

Note

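Important: A router lives on a single worker, and each worker runs on a single CPU core. With 1,000 concurrent meetings and 8 workers, you’ll place about 125 meetings on each worker (1,000 / 8). What a worker can actually sustain depends on its total number of consumers rather than meetings. The mediasoup documentation estimates roughly 500 consumers per worker, depending on the host. This is why worker pool design is critical for scaling.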
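A simple placement policy for that distribution is "least-loaded worker wins". The sketch below counts routers per worker and is generic over the worker type, so it runs without mediasoup. Counting routers assumes meetings are similar in size. A per-worker consumer count would be a better load signal. WorkerPool is a hypothetical name.

```typescript
// Tracks how many routers each worker hosts and hands out the least-loaded one.
class WorkerPool<W> {
  private readonly workers: W[];
  private readonly load: number[];

  constructor(workers: W[]) {
    if (workers.length === 0) throw new Error("pool needs at least one worker");
    this.workers = workers;
    this.load = workers.map(() => 0);
  }

  // Returns the chosen worker plus a release() to call when its router closes.
  acquire(): { worker: W; release: () => void } {
    let best = 0;
    for (let i = 1; i < this.load.length; i++) {
      if (this.load[i] < this.load[best]) best = i; // ties go to the lowest index
    }
    this.load[best]++;
    let released = false;
    return {
      worker: this.workers[best],
      release: () => {
        if (released) return; // release() is idempotent
        released = true;
        this.load[best]--;
      },
    };
  }

  loads(): readonly number[] {
    return this.load;
  }
}

// 1000 meetings spread over 8 workers: 125 routers each.
const pool = new WorkerPool(Array.from({ length: 8 }, (_, i) => `worker-${i}`));
for (let m = 0; m < 1000; m++) pool.acquire();
console.log(pool.loads().every((n) => n === 125)); // true
```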

Part 3: Peers, Transports, Producers, and Consumers

What Is a “Peer”?

Tricky part: Mediasoup doesn’t have a native “peer” concept. A peer is an application-level abstraction.

Correct way to think about it:

```typescript
import * as mediasoup from 'mediasoup';
import type { WebSocket } from 'ws'; // Assumption: the ws package on the server

interface Peer {
  id: string;                                        // Unique peer ID
  userId: string;                                    // Associated user account
  websocket: WebSocket;                              // WebSocket connection (for signaling)
  rtpCapabilities?: mediasoup.types.RtpCapabilities; // The client's device.rtpCapabilities
  sendTransport?: mediasoup.types.WebRtcTransport;   // For sending media
  recvTransport?: mediasoup.types.WebRtcTransport;   // For receiving media
  producers: Map<string, mediasoup.types.Producer>;  // Audio, video producers
  consumers: Map<string, mediasoup.types.Consumer>;  // Consuming from others
  dataProducer?: mediasoup.types.DataProducer;       // DataChannel producer
  dataConsumers: Map<string, mediasoup.types.DataConsumer>;
}
```

A peer is metadata that ties together:

- User identity

- WebSocket connection (signaling)

- Mediasoup transports, producers, consumers

```typescript
const peers = new Map<string, Peer>();

// When a user connects via WebSocket
websocket.on('message', async (msg) => {
  // In production, take peerId/userId from the authenticated connection, not the message
  const { peerId, action, data } = JSON.parse(msg.toString());
  let peer = peers.get(peerId);

  if (!peer) {
    peer = {
      id: peerId,
      userId: data.userId,
      websocket,
      producers: new Map(),
      consumers: new Map(),
      dataConsumers: new Map(),
    };
    peers.set(peerId, peer);
  }

  // Handle actions (join, produce, consume, etc.)
});
```

Part 4: The Critical Transport Concept

Why Two Separate Transports?

Tricky part: Many developers think one transport handles both sending and receiving.

Wrong:

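```typescript
const transport = await router.createWebRtcTransport(transportOptions);
// Try to both produce and consume on the same transport.
// The server-side transport would accept this, but the client can't mirror it:
// a mediasoup-client transport is either a send transport or a recv transport.
```

Correct: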

Because mediasoup-client transports are one-directional, create two transports per peer on the server:

- Send Transport: For producing media (audio/video)

- Receive Transport: For consuming media

```typescript
// Create send transport on server
// (announcedAddress: the public IP or hostname clients should connect to)
const sendTransport = await router.createWebRtcTransport({
  listenInfos: [{ protocol: 'udp', ip: '0.0.0.0', announcedAddress: 'example.com' }],
  enableSctp: true, // For DataChannel
  numSctpStreams: { OS: 1024, MIS: 1024 },
});

// Create recv transport on server
const recvTransport = await router.createWebRtcTransport({
  listenInfos: [{ protocol: 'udp', ip: '0.0.0.0', announcedAddress: 'example.com' }],
  enableSctp: true,
  numSctpStreams: { OS: 1024, MIS: 1024 },
});

peer.sendTransport = sendTransport;
peer.recvTransport = recvTransport;
```

Why two transports?

- Asymmetry in WebRTC: mediasoup-client backs each transport with its own RTCPeerConnection, and sending and receiving are negotiated differently

- Independent control: You can pause sending without affecting receiving

- Better resource management: Clearer separation of concerns
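On the server, the send/recv split is a convention you maintain yourself, because a WebRtcTransport does not know which role it plays. The two small helpers below assume the server records the role in the transport's appData, for example createWebRtcTransport({ ..., appData: { direction: 'send' } }). They use structural types instead of mediasoup's classes. The first picks the fields the client needs for device.createSendTransport() or createRecvTransport(). The second rejects a produce request aimed at a recv transport. All the names here are hypothetical.

```typescript
type TransportDirection = "send" | "recv";

// Structural stand-in for the server-side WebRtcTransport fields used here.
interface ServerTransportLike {
  id: string;
  iceParameters: unknown;
  iceCandidates: unknown[];
  dtlsParameters: unknown;
  sctpParameters?: unknown; // present when enableSctp: true
  appData: { direction?: TransportDirection };
}

interface ClientTransportParams {
  id: string;
  iceParameters: unknown;
  iceCandidates: unknown[];
  dtlsParameters: unknown;
  sctpParameters?: unknown;
}

// Only these fields go over signaling; appData stays on the server.
function toClientTransportParams(t: ServerTransportLike): ClientTransportParams {
  const { id, iceParameters, iceCandidates, dtlsParameters, sctpParameters } = t;
  return { id, iceParameters, iceCandidates, dtlsParameters, sctpParameters };
}

// Guard for hypothetical 'produce' / 'consume' signaling handlers.
function assertDirection(t: ServerTransportLike, expected: TransportDirection): void {
  if (t.appData.direction !== expected) {
    throw new Error(`transport ${t.id} is not a ${expected} transport`);
  }
}
```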

Creating Transports on the Client

The client-side must mirror the server-side transports:

```typescript
// Client-side TypeScript
import * as mediasoupClient from 'mediasoup-client';

const device = new mediasoupClient.Device();

// After await device.load({ routerRtpCapabilities }) has resolved;
// serverSendTransport / serverRecvTransport hold the parameters the server sent
const sendTransport = device.createSendTransport({
  id: serverSendTransport.id,
  iceParameters: serverSendTransport.iceParameters,
  iceCandidates: serverSendTransport.iceCandidates,
  dtlsParameters: serverSendTransport.dtlsParameters,
  sctpParameters: serverSendTransport.sctpParameters, // Needed for DataChannels
});

const recvTransport = device.createRecvTransport({
  id: serverRecvTransport.id,
  iceParameters: serverRecvTransport.iceParameters,
  iceCandidates: serverRecvTransport.iceCandidates,
  dtlsParameters: serverRecvTransport.dtlsParameters,
  sctpParameters: serverRecvTransport.sctpParameters,
});

// Handle connect event (DTLS handshake)
sendTransport.on('connect', async ({ dtlsParameters }, callback, errback) => {
  try {
    // Signal the server so it can complete the DTLS handshake
    await signalServer('connectTransport', {
      transportId: sendTransport.id,
      dtlsParameters,
    });
    callback(); // Confirm to mediasoup-client
  } catch (error) {
    errback(error as Error);
  }
});
```

Note

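Common mistake: Not handling the “connect” event properly. mediasoup-client emits “connect” the first time you call produce() or consume() on a transport. Your handler must send the dtlsParameters to the server, which calls transport.connect({ dtlsParameters }), and then call callback(). Otherwise the DTLS handshake never completes and no media flows.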
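The server half of that exchange is short. The sketch below is a handler for the connectTransport message used above. It assumes the server keeps its transports in a map keyed by ID. ConnectableTransport is a structural stand-in for mediasoup's WebRtcTransport, whose connect({ dtlsParameters }) completes the DTLS setup on the server side. handleConnectTransport is a hypothetical name.

```typescript
// Structural stand-in: mediasoup's WebRtcTransport exposes connect({ dtlsParameters }).
interface ConnectableTransport {
  id: string;
  connect(params: { dtlsParameters: unknown }): Promise<void>;
}

interface ConnectTransportRequest {
  transportId: string;
  dtlsParameters: unknown;
}

// Hypothetical handler for the 'connectTransport' signaling message.
// Resolving lets the client call callback(); rejecting should make it call errback().
async function handleConnectTransport(
  transports: Map<string, ConnectableTransport>,
  request: ConnectTransportRequest,
): Promise<void> {
  const transport = transports.get(request.transportId);
  if (!transport) {
    throw new Error(`unknown transport ${request.transportId}`);
  }
  await transport.connect({ dtlsParameters: request.dtlsParameters });
}
```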

Part 5: Producing Media (Sending Video/Audio)

The Flow: Client → Server

Step 1: Client gets media stream

```typescript
const stream = await navigator.mediaDevices.getUserMedia({
  audio: { noiseSuppression: true }, // Browser-side noise suppression
  video: { width: { ideal: 1280 }, height: { ideal: 720 } },
});

const audioTrack = stream.getAudioTracks()[0];
const videoTrack = stream.getVideoTracks()[0];
```

Step 2: Client produces on send transport

```typescript
// Produce audio
const audioProducer = await sendTransport.produce({
  track: audioTrack,
  encodings: [
    { maxBitrate: 128000 }, // 128 kbps for audio
  ],
  // Noise suppression is a getUserMedia constraint (audio: { noiseSuppression: true }),
  // not an Opus codec option, so no codecOptions are needed here
});

// Produce video
const videoProducer = await sendTransport.produce({
  track: videoTrack,
  encodings: [
    { maxBitrate: 5000000 }, // 5 Mbps cap for video
  ],
  codecOptions: {
    videoGoogleStartBitrate: 1000, // kbps
  },
});

// Store for later (a client-side Map, since "peer" is a server-side concept)
producers.set('audio', audioProducer);
producers.set('video', videoProducer);
```

Step 3: Transport emits “produce” event

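mediasoup-client emits this event while transport.produce() is running, so attach the listener before you call produce() (in practice, right after creating the transport):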

```typescript
sendTransport.on('produce', async ({ kind, rtpParameters }, callback, errback) => {
  try {
    // Signal server to create server-side Producer
    const producerId = await signalServer('produce', {
      transportId: sendTransport.id,
      kind,
      rtpParameters,
    });
    callback({ id: producerId }); // Confirm to mediasoup-client
  } catch (error) {
    errback(error as Error);
  }
});
```

Step 4: Server creates Producer

```typescript
// On server, handle produce signal
const producer = await peer.sendTransport!.produce({
  kind,
  rtpParameters,
});

peer.producers.set(kind, producer);

// Reply to the client's 'produce' request with producer.id (it feeds callback({ id }))

// Notify all other peers that this peer produced media
broadcast('newProducer', {
  peerId: peer.id,
  producerId: producer.id,
  kind,
});
```

Note

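Timing is important: The “produce” event fires during transport.produce(), and the promise returned by produce() stays pending until your listener calls callback({ id }) with the server-side producer ID, or errback() if the server fails. Signal the server inside the listener before calling back. If neither callback nor errback is ever called, produce() never settles.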
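Because callback() has to wait for a server reply, the signalServer() helper used throughout this article needs request/response semantics on top of a message socket. The minimal sketch below assumes JSON messages carrying a numeric id that the server echoes back. The class, the message shapes, and the 10-second timeout are illustrative choices. send would wrap something like ws.send(JSON.stringify(message)).

```typescript
interface SignalRequest {
  id: number;
  method: string;
  data: unknown;
}

interface SignalResponse {
  id: number;
  ok: boolean;
  data?: unknown;
  error?: string;
}

interface Pending {
  resolve: (value: unknown) => void;
  reject: (error: Error) => void;
}

class SignalingClient {
  private nextId = 1;
  private readonly pending = new Map<number, Pending>();
  private readonly send: (message: SignalRequest) => void;

  constructor(send: (message: SignalRequest) => void) {
    this.send = send;
  }

  // Resolves with the server's reply, or rejects on error/timeout so that
  // 'produce' and 'connect' listeners can call errback() instead of hanging.
  request<T>(method: string, data: unknown, timeoutMs = 10_000): Promise<T> {
    const id = this.nextId++;
    return new Promise<T>((resolve, reject) => {
      const timer = setTimeout(() => {
        this.pending.delete(id);
        reject(new Error(`${method} timed out after ${timeoutMs} ms`));
      }, timeoutMs);
      this.pending.set(id, {
        resolve: (value) => { clearTimeout(timer); resolve(value as T); },
        reject: (error) => { clearTimeout(timer); reject(error); },
      });
      try {
        this.send({ id, method, data });
      } catch (error) {
        this.pending.get(id)?.reject(error as Error);
        this.pending.delete(id);
      }
    });
  }

  // Call from the socket's message handler with each parsed response.
  handleResponse(response: SignalResponse): void {
    const entry = this.pending.get(response.id);
    if (!entry) return; // late reply after a timeout, or unknown id
    this.pending.delete(response.id);
    if (response.ok) entry.resolve(response.data);
    else entry.reject(new Error(response.error ?? "request failed"));
  }
}
```

With this in place, the listener's await signalServer('produce', ...) becomes await signaling.request<string>('produce', ...). A lost reply then surfaces as an errback() after the timeout, instead of a produce() call that never settles.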

Part 6: Consuming Media (Receiving Video/Audio)

The Flow: Server → Client

Important: Consuming runs in the opposite direction. The server creates the consumer first, and the client mirrors it.

Step 1: Other peers receive “newProducer” notification

When peer A produces, all other peers are notified about peer A’s producer.
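The broadcast() helper used in these examples is not part of mediasoup. The minimal sketch below assumes each peer exposes a send(text) function wrapping its signaling socket, and notifies everyone in the meeting except the peer that produced. SignalingPeer and broadcastExcept are hypothetical names.

```typescript
// Minimal peer shape for this sketch; send() stands in for the peer's WebSocket.
interface SignalingPeer {
  id: string;
  send(message: string): void;
}

// Notify every peer except the sender; returns how many peers were notified.
function broadcastExcept(
  peers: Iterable<SignalingPeer>,
  senderId: string,
  event: string,
  data: unknown,
): number {
  const payload = JSON.stringify({ event, data });
  let notified = 0;
  for (const peer of peers) {
    if (peer.id === senderId) continue; // the producing peer already knows
    peer.send(payload);
    notified++;
  }
  return notified;
}
```

Peers that join after this notification never receive it. A join handler therefore typically also sends the newcomer a list of the producers that already exist in the meeting.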

Step 2: Server creates Consumer

Peer B’s server-side code:

```typescript
async function subscribeToProducer(peerB: Peer, producerId: string) {
  // The capabilities peer B's device reported (device.rtpCapabilities) when joining
  const rtpCapabilities = peerB.rtpCapabilities!;

  // Check if peer B can consume this producer
  if (!router.canConsume({ producerId, rtpCapabilities })) {
    throw new Error('Cannot consume this producer');
  }

  // Create consumer on peer B's recv transport
  const consumer = await peerB.recvTransport!.consume({
    producerId,
    rtpCapabilities,
    paused: true, // Start paused!
  });

  peerB.consumers.set(consumer.id, consumer);

  // Signal peer B about the new consumer
  await signalClient(peerB, 'newConsumer', {
    id: consumer.id,
    producerId,
    kind: consumer.kind,
    rtpParameters: consumer.rtpParameters,
  });

  return consumer;
}
```

Step 3: Client creates Consumer

```typescript
recvTransport.on('connect', async ({ dtlsParameters }, callback, errback) => {
  // Same as send transport
  try {
    await signalServer('connectTransport', {
      transportId: recvTransport.id,
      dtlsParameters,
    });
    callback();
  } catch (error) {
    errback(error as Error);
  }
});

// Handle newConsumer notification
websocket.on('newConsumer', async (msg) => {
  const { id, producerId, kind, rtpParameters } = msg;

  const consumer = await recvTransport.consume({ id, producerId, kind, rtpParameters });

  // Store the consumer (client-side Map)
  consumers.set(id, consumer);

  // Attach the track to a media element
  const element = document.createElement(kind === 'video' ? 'video' : 'audio');
  element.srcObject = new MediaStream([consumer.track]);
  element.autoplay = true;
  document.body.appendChild(element);

  // Ask the server to resume its consumer (it was created paused)
  await signalServer('resumeConsumer', { consumerId: id });
});
```

Step 4: Server resumes Consumer

```typescript
// Handle resume signal
const consumer = peer.consumers.get(consumerId);
await consumer?.resume();
```

Note

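Critical mistake: Not starting consumers in the “paused” state. The mediasoup documentation recommends creating video consumers with paused: true and resuming them only after the client confirms it has created its side. If the consumer starts unpaused, mediasoup immediately requests a keyframe. That keyframe can reach the client before it is ready, which leaves the video black or frozen until the next keyframe. Many developers skip this step.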

Part 7: Spatial and Temporal Layers (Quality Control)

What Are Layers?

When a client sends video, it can use simulcast or SVC (Scalable Video Coding):

Simulcast: send multiple resolutions simultaneously:

- High: 1280x720 @ 30fps

- Medium: 640x360 @ 30fps

- Low: 320x180 @ 30fps

SVC: a single stream with nested quality layers (this requires a codec that supports SVC, such as VP9):

- Temporal: 30fps, 15fps, 7.5fps

- Spatial: 1280x720, 640x360, 320x180

The producer sends all layers. The server-side consumer selects which layers to forward to each receiver:

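```typescript
// Producer sends with simulcast. Encodings are listed lowest first:
// spatial layer 0 is the first entry.
const videoProducer = await sendTransport.produce({
  track: videoTrack,
  encodings: [
    { scaleResolutionDownBy: 4, maxBitrate: 300000 },  // Low (320x180 from a 720p track)
    { scaleResolutionDownBy: 2, maxBitrate: 1000000 }, // Medium (640x360)
    { scaleResolutionDownBy: 1, maxBitrate: 5000000 }, // High (1280x720)
  ],
  codecOptions: {
    videoGoogleStartBitrate: 1000,
  },
});

// Server-side consumer
const consumer = await peer.recvTransport!.consume({
  producerId,
  rtpCapabilities: peer.rtpCapabilities!, // Reported by the client's device
  paused: true,
});

// Set preferred layers (e.g., medium resolution). These are a ceiling:
// mediasoup forwards lower layers when the receiver's bandwidth is short.
await consumer.setPreferredLayers({
  spatialLayer: 1,  // Medium (0 = low, 1 = medium, 2 = high)
  temporalLayer: 1, // Middle frame rate, e.g. 15fps with three temporal layers at 30fps
});

// Listen for actual layer changes
consumer.on('layerschange', (layers) => {
  console.log('Effective layers:', layers); // undefined when nothing is being forwarded
  // Notify client about actual layers being sent
});
```

This is how SFU-based systems handle bandwidth optimization: they downgrade layers for slow clients instead of asking the sender to re-encode.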
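Which layer to prefer is an application decision. One common heuristic is to pick the smallest spatial layer whose height still covers the on-screen tile, scaled by the display's pixel ratio, so thumbnails never pull the top layer. preferredSpatialLayer below is a hypothetical helper for that heuristic. The layer heights of 180, 360, and 720 pixels match the simulcast example in this section and are assumptions. Spatial layer 0 is the lowest.

```typescript
// Heights of spatial layers 0, 1, 2 (lowest first), per the simulcast example.
const LAYER_HEIGHTS: readonly number[] = [180, 360, 720];

// Smallest spatial layer that covers the rendered tile; the top layer if none does.
function preferredSpatialLayer(
  tileHeightCssPx: number,
  devicePixelRatio = 1,
  layerHeights: readonly number[] = LAYER_HEIGHTS,
): number {
  const neededPx = tileHeightCssPx * devicePixelRatio;
  for (let layer = 0; layer < layerHeights.length; layer++) {
    if (layerHeights[layer] >= neededPx) return layer;
  }
  return layerHeights.length - 1;
}

console.log(preferredSpatialLayer(160));    // 0: a thumbnail fits in 180p
console.log(preferredSpatialLayer(340));    // 1
console.log(preferredSpatialLayer(300, 2)); // 2: 600 device pixels needs 720p
```

The client would report its tile size over signaling, and the server would pass the result to consumer.setPreferredLayers({ spatialLayer }). mediasoup still drops below that layer if the receiver's bandwidth estimate requires it.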

Part 8: Data Channels (Non-Media Communication)

Producing Data (Sending)

For things like chat messages, you can use DataChannels:

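```typescript
// Client: the send transport asks the server to create the matching DataProducer
sendTransport.on('producedata', async ({ sctpStreamParameters, label, protocol }, callback, errback) => {
  try {
    const dataProducerId = await signalServer('produceData', {
      transportId: sendTransport.id,
      sctpStreamParameters,
      label,
      protocol,
    });
    callback({ id: dataProducerId });
  } catch (error) {
    errback(error as Error);
  }
});

// Client produces data: reliable and ordered, which suits chat.
// (For partial reliability, use ordered: false with maxRetransmits or maxPacketLifeTime.)
const dataProducer = await sendTransport.produceData({ ordered: true, label: 'chat' });

// Send data through it once the channel is open
const sendHello = () =>
  dataProducer.send(JSON.stringify({ type: 'chat', message: 'Hello!', timestamp: Date.now() }));
if (dataProducer.readyState === 'open') sendHello();
else dataProducer.on('open', sendHello);
```

Consuming Data (Receiving)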

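```typescript
// Server creates a DataConsumer on the receiving peer's recv transport
const dataConsumer = await peer.recvTransport!.consumeData({ dataProducerId });
peer.dataConsumers.set(dataConsumer.id, dataConsumer);

// Server signals the client with what it needs to create the matching DataConsumer
const { id, sctpStreamParameters, label, protocol } = dataConsumer;
await signalClient(peer, 'newDataConsumer', { id, dataProducerId, sctpStreamParameters, label, protocol });

// Client: create the DataConsumer from that message and handle incoming data
const clientDataConsumer = await recvTransport.consumeData({ id, dataProducerId, sctpStreamParameters, label, protocol });

clientDataConsumer.on('open', () => {
  console.log('DataChannel opened');
});

clientDataConsumer.on('message', (msg) => {
  const data = JSON.parse(msg);
  console.log('Received:', data);
});
```

Note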

Use cases for DataChannels:

- Chat messages

- Typing indicators

- Pointer/cursor sharing

- Game state sync

- Metadata exchange

Anything that’s not audio/video but needs low-latency delivery.
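These use cases do not all need the same delivery guarantees. One possible policy is sketched below as a hypothetical helper. Messages a user would notice losing (chat, metadata) go on a reliable, ordered channel. High-rate state where only the latest value matters (typing, cursor, often game state) goes on an unordered channel that gives up on stale packets. ordered, maxPacketLifeTime, and label are real produceData() options in mediasoup-client. The DataKind names and the 150 ms lifetime are assumptions.

```typescript
// Subset of the options accepted by mediasoup-client's transport.produceData().
interface DataChannelOptions {
  label: string;
  ordered: boolean;
  maxPacketLifeTime?: number; // ms; set only for partially reliable channels
}

type DataKind = "chat" | "metadata" | "typing" | "cursor" | "gameState";

function dataChannelOptionsFor(kind: DataKind): DataChannelOptions {
  switch (kind) {
    case "chat":
    case "metadata":
      // Reliable and ordered: every message arrives, in order.
      return { label: kind, ordered: true };
    default:
      // typing, cursor, gameState: unordered, and stop retransmitting
      // a message once it is 150 ms old.
      return { label: kind, ordered: false, maxPacketLifeTime: 150 };
  }
}
```

Each kind would then get its own DataProducer, for example sendTransport.produceData(dataChannelOptionsFor('cursor')).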

Part 9: The Tricky Part — Signaling Synchronization

Common Mistake: Forgetting to Signal Both Sides

Many developers create mediasoup objects but forget to tell the other side:

Wrong:

```typescript
// Server creates consumer
const consumer = await transport.consume({
  producerId,
  rtpCapabilities,
});
// Oops, forgot to signal the client!
// Client has no idea consumer was created
// Video won't appear
```

Correct:

```typescript
// Server creates consumer
const consumer = await transport.consume({
  producerId,
  rtpCapabilities,
  paused: true,
});

// Signal client about new consumer
await sendSignal(peerId, 'newConsumer', {
  id: consumer.id,
  producerId,
  kind: consumer.kind,
  rtpParameters: consumer.rtpParameters,
});

// Client receives signal and creates matching consumer
// Client asks the server to resume when ready
```

Another Mistake: Not Handling Close Events

Wrong:

```typescript
// Server closes a producer
producer.close();
// Client doesn't know producer closed
// Client's consumer becomes stale
```

Correct:

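```typescript
// Server: 'transportclose' fires only when the producer's transport closes
// (e.g. the peer disconnected), not when you call producer.close() yourself
producer.on('transportclose', () => {
  broadcast('producerClosed', { producerId: producer.id });
});

// When your own code closes a producer, notify clients right away
producer.close();
broadcast('producerClosed', { producerId: producer.id });

// Server-side consumers fed by that producer emit 'producerclose'
consumer.on('producerclose', () => {
  peer.consumers.delete(consumer.id);
});

// Client receives close signal
websocket.on('producerClosed', ({ producerId }) => {
  const consumer = findConsumerByProducerId(producerId);
  consumer?.close(); // Clean up client-side
});
```

The core principle: mediasoup doesn’t sync state between client and server for you. You must signal every action.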
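On the client, the findConsumerByProducerId() lookup in the close handler above needs an index from producer ID to consumer. The small registry below assumes one consumer per remote producer on each client, which is how the flow in this article works. ClosableConsumer models only the id, producerId, and close() members of a mediasoup-client Consumer. ConsumerRegistry is a hypothetical name.

```typescript
// The members of a mediasoup-client Consumer this sketch relies on.
interface ClosableConsumer {
  id: string;
  producerId: string;
  close(): void;
}

class ConsumerRegistry<C extends ClosableConsumer> {
  private readonly byId = new Map<string, C>();
  private readonly byProducerId = new Map<string, C>();

  add(consumer: C): void {
    this.byId.set(consumer.id, consumer);
    this.byProducerId.set(consumer.producerId, consumer);
  }

  findByProducerId(producerId: string): C | undefined {
    return this.byProducerId.get(producerId);
  }

  // Closes and forgets the consumer; returns false if it was already gone,
  // so duplicate close notifications are harmless.
  closeByProducerId(producerId: string): boolean {
    const consumer = this.byProducerId.get(producerId);
    if (!consumer) return false;
    this.byProducerId.delete(producerId);
    this.byId.delete(consumer.id);
    consumer.close();
    return true;
  }

  get size(): number {
    return this.byId.size;
  }
}
```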

Part 10: Troubleshooting Common Issues

Issue 1: “Producer is closed” Error

Cause: Producer closed on server, but client tried to produce again.

Solution: Listen to producer events and handle closure:

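```typescript
// Client: the producer dies with its transport
audioProducer.on('transportclose', () => {
  console.log('Transport closed, producer is dead');
  setProducerActive(false);
});

// Client: the microphone track ended (device unplugged, permission revoked)
audioProducer.on('trackended', () => {
  console.warn('Audio track ended');
  setProducerActive(false);
});

// Server: monitor producer quality (one score per stream, from 0 to 10)
producer.on('score', (scores) => {
  if (scores.some((s) => s.score < 5)) {
    console.warn('Poor producer quality');
  }
});
```

Issue 2: “No suitable codec” Error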

Cause: Client’s RTP capabilities don’t match router’s codecs.

Solution: Ensure device is loaded properly:

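```typescript
const device = new mediasoupClient.Device();

// Load device with the router's RTP capabilities (router.rtpCapabilities on the server)
await device.load({
  routerRtpCapabilities: routerCapabilities,
});

// Now the device knows which codecs are supported
if (!device.canProduce('video')) {
  console.warn('This browser cannot send video with the router codecs');
}

const sendTransport = device.createSendTransport({
  id: serverTransport.id,
  // ...
});
```

Issue 3: Video Freezes or Choppy Audio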

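Cause: Usually a server-side consumer that was never resumed, a paused producer, or packet loss on the sender’s or receiver’s network. Mismatched layer preferences can also contribute.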

Solution: Check consumer state:

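```typescript
// Monitor consumer quality (server side; scores range from 0 to 10)
consumer.on('score', ({ score, producerScore }) => {
  if (producerScore < 5) {
    console.warn('Poor quality on the sending side'); // producer -> server
  } else if (score < 5) {
    console.warn('Poor quality on the receiving side'); // server -> consumer
  }
});

// Inspect raw stats (packet loss, jitter, bitrate) when needed
const stats = await consumer.getStats();
console.log('Consumer stats:', stats);

// Check if the consumer, or the producer feeding it, is paused
if (consumer.paused || consumer.producerPaused) {
  console.log('Consumer is paused');
  // Might need to resume
}
```

Part 11: The Connection Flow (End-to-End)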

Let’s trace a complete flow:

```typescript
// 1. [Client A] Join the meeting
websocket.emit('joinMeeting', { meetingId, userId: 'A' });

// 2. [Server] Create the meeting's router (first participant only) and A's transports
//    (transportOptions: listenInfos, enableSctp, etc. as in Part 4)
const router = await worker.createRouter({ mediaCodecs });
const sendTransportA = await router.createWebRtcTransport(transportOptions);
const recvTransportA = await router.createWebRtcTransport(transportOptions);

// 3. [Server] Send the router's capabilities and the transport parameters to A
socketA.emit('joinedMeeting', {
  routerRtpCapabilities: router.rtpCapabilities,
  sendTransport: {
    id: sendTransportA.id,
    iceParameters: sendTransportA.iceParameters,
    iceCandidates: sendTransportA.iceCandidates,
    dtlsParameters: sendTransportA.dtlsParameters,
  },
  recvTransport: {
    // ...same fields for recvTransportA
  },
});

// 4. [Client A] Load the device, report its RTP capabilities, create local transports
await device.load({ routerRtpCapabilities });
websocket.emit('rtpCapabilities', device.rtpCapabilities);
const sendTransport = device.createSendTransport(/* server data */);
const recvTransport = device.createRecvTransport(/* server data */);

// 5. [Client A] Register 'connect' and 'produce' listeners before producing
sendTransport.on('connect', async ({ dtlsParameters }, callback, errback) => {
  try {
    await signalServer('connectTransport', { transportId: sendTransport.id, dtlsParameters });
    callback();
  } catch (error) {
    errback(error as Error);
  }
});
sendTransport.on('produce', async ({ kind, rtpParameters }, callback, errback) => {
  try {
    const producerId = await signalServer('produce', { transportId: sendTransport.id, kind, rtpParameters });
    callback({ id: producerId });
  } catch (error) {
    errback(error as Error);
  }
});

// 6. [Client B] User B joins the meeting (after step 7, steps 3-5 repeat for B)
websocket.emit('joinMeeting', { meetingId, userId: 'B' });

// 7. [Server] Create transports for B on the same router
const sendTransportB = await router.createWebRtcTransport(transportOptions);
const recvTransportB = await router.createWebRtcTransport(transportOptions);

// 8. [Server] Notify A about new peer B
socketA.emit('newPeer', { peerId: 'B' });

// 9. [Client A] Produce video: this fires 'connect' (first use), then 'produce'
const videoProducer = await sendTransport.produce({
  track: videoTrack,
  encodings: [{ maxBitrate: 5000000 }],
});

// 10. [Server] Handle 'connectTransport', then 'produce', on A's send transport
await sendTransportA.connect({ dtlsParameters });
const producerA = await sendTransportA.produce({ kind: 'video', rtpParameters });

// 11. [Server] Notify the other peers (here, B) about A's producer
broadcast('newProducer', { peerId: 'A', producerId: producerA.id, kind: 'video' });

// 12. [Server] Create a paused consumer on B's recvTransport
const consumerA_onB = await recvTransportB.consume({
  producerId: producerA.id,
  rtpCapabilities: rtpCapabilitiesB, // Reported by B in its step 4
  paused: true,
});

// 13. [Server] Signal B about A's video
socketB.emit('newConsumer', {
  id: consumerA_onB.id,
  producerId: producerA.id,
  kind: 'video',
  rtpParameters: consumerA_onB.rtpParameters,
});

// 14. [Client B] Create the consumer from the 'newConsumer' message
//     (the first consume() fires 'connect' on B's recvTransport)
const consumer = await recvTransport.consume({ id, producerId, kind, rtpParameters });

// 15. [Client B] Attach the track to a video element
const videoElement = document.createElement('video');
videoElement.srcObject = new MediaStream([consumer.track]);
videoElement.play();

// 16. [Client B] Ask the server to resume the consumer
websocket.emit('resumeConsumer', { consumerId: consumer.id });

// 17. [Server] Resume the server-side consumer
await peerB.consumers.get(consumerId)?.resume();

// 18. Video flows: A → mediasoup router → B
// Success!
```

That’s the complete flow.

Part 12: Production Considerations

Monitoring

Monitor these metrics:

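```typescript
// metrics: your application's metrics client (hypothetical)

// Worker health: 'died' means the worker subprocess crashed
worker.on('died', (error) => {
  console.error('Worker crashed', error);
  // Restart logic
});

// Worker CPU usage (getrusage-style counters such as ru_utime and ru_stime)
const usage = await worker.getResourceUsage();
metrics.recordWorkerUsage(worker.pid, usage);

// Producer quality: one score (0 to 10) per stream/encoding
producer.on('score', (scores) => {
  for (const { encodingIdx, score } of scores) {
    metrics.recordProducerScore(producer.id, encodingIdx, score);
  }
});

// Consumer stats, polled until the consumer closes
const timer = setInterval(async () => {
  const stats = await consumer.getStats();
  metrics.recordConsumerStats(consumer.id, stats);
}, 5000);
consumer.observer.on('close', () => clearInterval(timer));
```

Scaling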

For 1000+ concurrent users:

- Multiple workers: 1 per CPU core

- Load balancing: Distribute users across workers

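- Router placement: Put each new meeting’s router on the least-loaded worker; a single large meeting can span several workers with router.pipeToRouter()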

- Memory management: Close transports/consumers cleanly

- Database: Track user → router → worker mappings
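Clean shutdown mostly comes down to closing transports. In mediasoup, closing a transport also closes the producers and consumers created on it. Server-side consumers on other peers that were fed by those producers then emit 'producerclose'. The disconnect handler below relies on those behaviors. A structural Closable type stands in for mediasoup's transports, and cleanupPeer and the peer shape are hypothetical.

```typescript
interface Closable {
  close(): void;
}

// Mirrors the Peer shape from Part 3, reduced to what cleanup needs.
interface PeerResources {
  id: string;
  sendTransport?: Closable;
  recvTransport?: Closable;
  producers: Map<string, Closable>;
  consumers: Map<string, Closable>;
}

// Call from the signaling socket's 'close' handler.
function cleanupPeer(peers: Map<string, PeerResources>, peerId: string): boolean {
  const peer = peers.get(peerId);
  if (!peer) return false; // already cleaned up
  peers.delete(peerId);

  // Closing the transports also closes their producers and consumers.
  peer.sendTransport?.close();
  peer.recvTransport?.close();

  // Drop references so the closed objects can be garbage-collected.
  peer.producers.clear();
  peer.consumers.clear();
  return true;
}
```

When the last peer leaves, closing the meeting's router releases everything that is still left on it.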

Security

- Authenticate WebSocket connections

- Validate user permissions before allowing consume

- Rate limit signaling messages

- Use TLS for all connections
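For the rate-limiting item, a per-peer token bucket is a common choice. Each peer can send a short burst of messages and is then held to a steady rate. The sketch below uses illustrative numbers, and TokenBucket and allowMessage are hypothetical names. Time is passed in explicitly so the logic is easy to test.

```typescript
// Token bucket: up to `capacity` messages in a burst, refilled at `refillPerSec`.
class TokenBucket {
  private readonly capacity: number;
  private readonly refillPerSec: number;
  private tokens: number;
  private lastMs: number;

  constructor(capacity: number, refillPerSec: number, nowMs: number = Date.now()) {
    this.capacity = capacity;
    this.refillPerSec = refillPerSec;
    this.tokens = capacity;
    this.lastMs = nowMs;
  }

  // Returns true if the message may be processed, false if it should be dropped.
  tryTake(nowMs: number = Date.now()): boolean {
    const elapsedSec = Math.max(0, nowMs - this.lastMs) / 1000;
    this.tokens = Math.min(this.capacity, this.tokens + elapsedSec * this.refillPerSec);
    this.lastMs = nowMs;
    if (this.tokens < 1) return false;
    this.tokens -= 1;
    return true;
  }
}

// One bucket per peer: bursts of 20 messages, 10 per second sustained (illustrative values).
const buckets = new Map<string, TokenBucket>();

function allowMessage(peerId: string, nowMs: number = Date.now()): boolean {
  let bucket = buckets.get(peerId);
  if (!bucket) {
    bucket = new TokenBucket(20, 10, nowMs);
    buckets.set(peerId, bucket);
  }
  return bucket.tryTake(nowMs);
}
```

Delete a peer's bucket when it disconnects so the map does not grow without bound.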

Conclusion: Mediasoup Is Powerful But Requires Care

Mediasoup is an excellent choice for building video conferencing. It’s:

- Low-level (you control everything)

- High-performance (forwarding, not mixing)

- Scalable (worker model)

- Flexible (you build the app layer)

But it requires understanding:

- The SFU model

- Producer/Consumer flows

- Transport mechanics

- Signaling synchronization

- Event handling

The most common mistakes:

- Forgetting to signal both client and server

- Not handling close events

- Not starting consumers in paused state

- Misunderstanding separate send/recv transports

- Not monitoring producer/consumer quality

Master these, and you can build production-grade video conferencing systems.


Now go build something amazing.